Description

Table of Contents

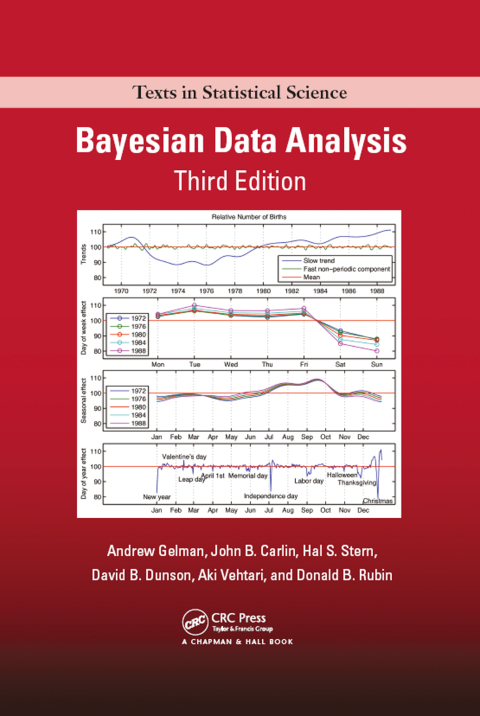

- Cover

- Half-Title Page

- Title Page

- Copyright Page

- Table of Contents

- Preface

- Part I Fundamentals of Bayesian Inference

- Chapter 1 Probability and inference

- 1.1 The three steps of Bayesian data analysis

- 1.2 General notation for statistical inference

- Parameters, data, and predictions

- Observational units and variables

- Exchangeability

- Explanatory variables

- Hierarchical modeling

- 1.3 Bayesian inference

- Bayes’ rule

- Prediction

- Likelihood

- Likelihood and odds ratios

- 1.4 Discrete examples: genetics and spell checking

- Inference about a genetic status

- Spelling correction

- 1.5 Probability as a measure of uncertainty

- Subjectivity and objectivity

- 1.6 Example: probabilities from football point spreads

- Football point spreads and game outcomes

- Assigning probabilities based on observed frequencies

- A parametric model for the difference between outcome and point spread

- Assigning probabilities using the parametric model

- 1.7 Example: calibration for record linkage

- Existing methods for assigning scores to potential matches

- Estimating match probabilities empirically

- External validation of the probabilities using test data

- 1.8 Some useful results from probability theory

- Modeling using conditional probability

- Means and variances of conditional distributions

- Transformation of variables

- 1.9 Computation and software

- Summarizing inferences by simulation

- Sampling using the inverse cumulative distribution function

- Simulation of posterior and posterior predictive quantities

- 1.10 Bayesian inference in applied statistics

- 1.11 Bibliographic note

- 1.12 Exercises

- Chapter 2 Single-parameter models

- 2.1 Estimating a probability from binomial data

- Prediction

- 2.2 Posterior as compromise between data and prior information

- 2.3 Summarizing posterior inference

- Posterior quantiles and intervals

- 2.4 Informative prior distributions

- Binomial example with different prior distributions

- Conjugate prior distributions

- Nonconjugate prior distributions

- Conjugate prior distributions, exponential families, and sufficient statistics

- 2.5 Normal distribution with known variance

- Likelihood of one data point

- Conjugate prior and posterior distributions

- Posterior predictive distribution

- Normal model with multiple observations

- 2.6 Other standard single-parameter models

- Normal distribution with known mean but unknown variance

- Poisson model

- Poisson model parameterized in terms of rate and exposure

- Exponential model

- 2.7 Example: informative prior distribution for cancer rates

- A puzzling pattern in a map

- Bayesian inference for the cancer death rates

- Relative importance of the local data and the prior distribution

- Constructing a prior distribution

- 2.8 Noninformative prior distributions

- Proper and improper prior distributions

- Improper prior distributions can lead to proper posterior distributions

- Jeffreys’ invariance principle

- Various noninformative prior distributions for the binomial parameter

- Pivotal quantities

- Difficulties with noninformative prior distributions

- 2.9 Weakly informative prior distributions

- Constructing a weakly informative prior distribution

- 2.10 Bibliographic note

- 2.11 Exercises

- Chapter 3 Introduction to multiparameter models

- 3.1 Averaging over ‘nuisance parameters’

- 3.2 Normal data with a noninformative prior distribution

- A noninformative prior distribution

- The joint posterior distribution, p

- The conditional posterior distribution, p

- The marginal posterior distribution, p

- Sampling from the joint posterior distribution

- Analytic form of the marginal posterior distribution of µ

- Posterior predictive distribution for a future observation

- 3.3 Normal data with a conjugate prior distribution

- A family of conjugate prior distributions

- The joint posterior distribution, p

- The conditional posterior distribution, p

- The marginal posterior distribution, p

- Sampling from the joint posterior distribution

- Analytic form of the marginal posterior distribution

- 3.4 Multinomial model for categorical data

- 3.5 Multivariate normal model with known variance

- Multivariate normal likelihood

- Conjugate analysis

- 3.6 Multivariate normal with unknown mean and variance

- Conjugate inverse-Wishart family of prior distributions

- Different noninformative prior distributions

- Scaled inverse-Wishart model

- 3.7 Example: analysis of a bioassay experiment

- The scientific problem and the data

- Modeling the dose-response relation

- The likelihood

- The prior distribution

- A rough estimate of the parameters

- Obtaining a contour plot of the joint posterior density

- Sampling from the joint posterior distribution

- The posterior distribution of the LD50

- 3.8 Summary of elementary modeling and computation

- 3.9 Bibliographic note

- 3.10 Exercises

- Chapter 4 Asymptotics and connections to non-Bayesian approaches

- 4.1 Normal approximations to the posterior distribution

- Normal approximation to the joint posterior distribution

- Interpretation of the posterior density function relative to its maximum

- Summarizing posterior distributions by point estimates and standard errors

- Data reduction and summary statistics

- Lower-dimensional normal approximations

- 4.2 Large-sample theory

- Notation and mathematical setup

- Asymptotic normality and consistency

- Likelihood dominating the prior distribution

- 4.3 Counterexamples to the theorems

- 4.4 Frequency evaluations of Bayesian inferences

- Large-sample correspondence

- Point estimation, consistency, and efficiency

- Confidence coverage

- 4.5 Bayesian interpretations of other statistical methods

- Maximum likelihood and other point estimates

- Unbiased estimates

- Confidence intervals

- Hypothesis testing

- Multiple comparisons and multilevel modeling

- Nonparametric methods, permutation tests, jackknife, bootstrap

- 4.6 Bibliographic note

- 4.7 Exercises

- Chapter 5 Hierarchical models

- 5.1 Constructing a parameterized prior distribution

- Analyzing a single experiment in the context of historical data

- Logic of combining information

- 5.2 Exchangeability and hierarchical models

- Exchangeability

- Exchangeability when additional information is available on the units

- Objections to exchangeable models

- The full Bayesian treatment of the hierarchical model

- The hyperprior distribution

- Posterior predictive distributions

- 5.3 Bayesian analysis of conjugate hierarchical models

- Analytic derivation of conditional and marginal distributions

- Drawing simulations from the posterior distribution

- Application to the model for rat tumors

- 5.4 Normal model with exchangeable parameters

- The data structure

- Constructing a prior distribution from pragmatic considerations

- The hierarchical model

- The joint posterior distribution

- The conditional posterior distribution of the normal means, given the hyperparameters

- The marginal posterior distribution of the hyperparameters

- Computation

- Posterior predictive distributions

- Difficulty with a natural non-Bayesian estimate of the hyperparameters

- 5.5 Example: parallel experiments in eight schools

- Inferences based on nonhierarchical models and their problems

- Posterior simulation under the hierarchical model

- Results

- Discussion

- 5.6 Hierarchical modeling applied to a meta-analysis

- Defining a parameter for each study

- A normal approximation to the likelihood

- Goals of inference in meta-analysis

- What if exchangeability is inappropriate?

- A hierarchical normal model

- Results of the analysis and comparison to simpler methods

- 5.7 Weakly informative priors for variance parameters

- Concepts relating to the choice of prior distribution

- Classes of noninformative and weakly informative prior distributions for hierarchical variance parameters

- Application to the 8-schools example

- Weakly informative prior distribution for the 3-schools problem

- 5.8 Bibliographic note

- 5.9 Exercises

- Part II Fundamentals of Bayesian Data Analysis

- Chapter 6 Model checking

- 6.1 The place of model checking in applied Bayesian statistics

- Sensitivity analysis and model improvement

- Judging model flaws by their practical implications

- 6.2 Do the inferences from the model make sense?

- External validation

- Choices in defining the predictive quantities

- 6.3 Posterior predictive checking

- Notation for replications

- Test quantities

- Tail-area probabilities

- Choosing test quantities

- Multiple comparisons

- Interpreting posterior predictive p-values

- Limitations of posterior tests

- P-values and u-values

- Model checking and the likelihood principle

- Marginal predictive checks

- 6.4 Graphical posterior predictive checks

- Direct data display

- Displaying summary statistics or inferences

- Residual plots and binned residual plots

- General interpretation of graphs as model checks

- 6.5 Model checking for the educational testing example

- Assumptions of the model

- Comparing posterior inferences to substantive knowledge

- Posterior predictive checking

- Sensitivity analysis

- 6.6 Bibliographic note

- 6.7 Exercises

- Chapter 7 Evaluating, comparing, and expanding models

- 7.1 Measures of predictive accuracy

- Predictive accuracy for a single data point

- Averaging over the distribution of future data

- Evaluating predictive accuracy for a fitted model

- Choices in defining the likelihood and predictive quantities

- 7.2 Information criteria and cross-validation

- Estimating out-of-sample predictive accuracy using available data

- Log predictive density asymptotically, or for normal linear models

- Akaike information criterion (AIC)

- Deviance information criterion (DIC) and effective number of parameters

- Watanabe-Akaike or widely applicable information criterion (WAIC)

- Effective number of parameters as a random variable

- ‘Bayesian’ information criterion (BIC)

- Leave-one-out cross-validation

- Comparing different estimates of out-of-sample prediction accuracy

- 7.3 Model comparison based on predictive performance

- Evaluating predictive error comparisons

- Bias induced by model selection

- Challenges

- 7.4 Model comparison using Bayes factors

- 7.5 Continuous model expansion

- Sensitivity analysis

- Adding parameters to a model

- Accounting for model choice in data analysis

- Selection of predictors and combining information

- Alternative model formulations

- Practical advice for model checking and expansion

- 7.6 Implicit assumptions and model expansion: an example

- 7.7 Bibliographic note

- 7.8 Exercises

- Chapter 8 Modeling accounting for data collection

- 8.1 Bayesian inference requires a model for data collection

- Generality of the observed- and missing-data paradigm

- 8.2 Data-collection models and ignorability

- Notation for observed and missing data

- Stability assumption

- Fully observed covariates

- Data model, inclusion model, and complete and observed data likelihood

- Joint posterior distribution of parameters θ from the sampling model and ϕ from the missing-data model

- Finite-population and superpopulation inference

- Ignorability

- ‘Missing at random’ and ‘distinct parameters’

- Ignorability and Bayesian inference under different data-collection schemes

- Propensity scores

- Unintentional missing data

- 8.3 Sample surveys

- Simple random sampling of a finite population

- Stratified sampling

- Cluster sampling

- Unequal probabilities of selection

- 8.4 Designed experiments

- Completely randomized experiments

- Randomized blocks, Latin squares, etc.

- Sequential designs

- Including additional predictors beyond the minimally adequate summary

- 8.5 Sensitivity and the role of randomization

- Complete randomization

- Randomization given covariates

- Designs that ‘cheat’

- Bayesian analysis of nonrandomized studies

- 8.6 Observational studies

- Comparison to experiments

- Bayesian inference for observational studies

- Causal inference and principal stratification

- Complier average causal effects and instrumental variables

- Bayesian causal inference with noncompliance

- 8.7 Censoring and truncation

- 1. Data missing completely at random

- 2. Data missing completely at random with unknown probability of missingness

- 3. Censored data

- 4. Censored data with unknown censoring point

- 5. Truncated data

- 6. Truncated data with unknown truncation point

- More complicated patterns of missing data

- 8.8 Discussion

- 8.9 Bibliographic note

- 8.10 Exercises

- Chapter 9 Decision analysis

- 9.1 Bayesian decision theory in different contexts

- Bayesian inference and decision trees

- Summarizing inference and model selection

- 9.2 Using regression predictions: survey incentives

- Background on survey incentives

- Data from 39 experiments

- Setting up a Bayesian meta-analysis

- Inferences from the model

- Inferences about costs and response rates for the Social Indicators Survey

- Loose ends

- 9.3 Multistage decision making: medical screening

- Example with a single decision point

- Adding a second decision point

- 9.4 Hierarchical decision analysis for home radon

- Background

- The individual decision problem

- Decision-making under certainty

- Bayesian inference for county radon levels

- Hierarchical model.

- Inferences.

- Bayesian inference for the radon level in an individual house

- Decision analysis for individual homeowners

- Deciding whether to remediate given a measurement.

- Aggregate consequences of individual decisions

- Applying the recommended decision strategy to the entire country.

- Evaluation of different decision strategies.

- 9.5 Personal vs. institutional decision analysis

- 9.6 Bibliographic note

- 9.7 Exercises

- Part III Advanced Computation

- Chapter 10 Introduction to Bayesian computation

- Normalized and unnormalized densities

- Log densities

- 10.1 Numerical integration

- Simulation methods

- Deterministic methods

- 10.2 Distributional approximations

- Crude estimation by ignoring some information

- 10.3 Direct simulation and rejection sampling

- Direct approximation by calculating at a grid of points

- Simulating from predictive distributions

- Rejection sampling

- 10.4 Importance sampling

- Accuracy and efficiency of importance sampling estimates

- Importance resampling

- Uses of importance sampling in Bayesian computation

- 10.5 How many simulation draws are needed?

- 10.6 Computing environments

- The Bugs family of programs

- Stan

- Other Bayesian software

- 10.7 Debugging Bayesian computing

- Debugging using fake data

- Model checking and convergence checking as debugging

- 10.8 Bibliographic note

- 10.9 Exercises

- Chapter 11 Basics of Markov chain simulation

- 11.1 Gibbs sampler

- 11.2 Metropolis and Metropolis-Hastings algorithms

- The Metropolis algorithm

- Relation to optimization

- Why does the Metropolis algorithm work?

- The Metropolis-Hastings algorithm

- Relation between the jumping rule and efficiency of simulations

- 11.3 Using Gibbs and Metropolis as building blocks

- Interpretation of the Gibbs sampler as a special case of the Metropolis-Hastings algorithm

- Gibbs sampler with approximations

- 11.4 Inference and assessing convergence

- Difficulties of inference from iterative simulation

- Discarding early iterations of the simulation runs

- Dependence of the iterations in each sequence

- Multiple sequences with overdispersed starting points

- Monitoring scalar estimands

- Challenges of monitoring convergence: mixing and stationarity

- Splitting each saved sequence into two parts

- Assessing mixing using between- and within-sequence variances

- 11.5 Effective number of simulation draws

- Bounded or long-tailed distributions

- Stopping the simulations

- 11.6 Example: hierarchical normal model

- Data from a small experiment

- The model

- Starting points

- Gibbs sampler

- Numerical results with the coagulation data

- The Metropolis algorithm

- Metropolis results with the coagulation data

- 11.7 Bibliographic note

- 11.8 Exercises

- Chapter 12 Computationally efficient Markov chain simulation

- 12.1 Efficient Gibbs samplers

- Transformations and reparameterization

- Auxiliary variables

- Parameter expansion

- 12.2 Efficient Metropolis jumping rules

- Adaptive algorithms

- 12.3 Further extensions to Gibbs and Metropolis

- Slice sampling

- Reversible jump sampling for moving between spaces of differing dimensions

- Simulated tempering and parallel tempering

- Particle filtering, weighting, and genetic algorithms

- 12.4 Hamiltonian Monte Carlo

- The momentum distribution, p(ϕ)

- The three steps of an HMC iteration

- Restricted parameters and areas of zero posterior density

- Setting the tuning parameters

- Varying the tuning parameters during the run

- Locally adaptive HMC

- Combining HMC with Gibbs sampling

- 12.5 Hamiltonian Monte Carlo for a hierarchical model

- Transforming to log τ

- 12.6 Stan: developing a computing environment

- Entering the data and model

- Setting tuning parameters in the warm-up phase

- No-U-turn sampler

- Inferences and postprocessing

- 12.7 Bibliographic note

- 12.8 Exercises

- Chapter 13 Modal and distributional approximations

- 13.1 Finding posterior modes

- Conditional maximization

- Newton’s method

- Quasi-Newton and conjugate gradient methods

- Numerical computation of derivatives

- 13.2 Boundary-avoiding priors for modal summaries

- Posterior modes on the boundary of parameter space

- Zero-avoiding prior distribution for a group-level variance parameter

- Boundary-avoiding prior distribution for a correlation parameter

- Degeneracy-avoiding prior distribution for a covariance matrix

- 13.3 Normal and related mixture approximations

- Fitting multivariate normal densities based on the curvature at the modes

- Laplace’s method for analytic approximation of integrals

- Mixture approximation for multimodal densities

- Multivariate t approximation instead of the normal

- Sampling from the approximate posterior distributions

- 13.4 Finding marginal posterior modes using EM

- Derivation of the EM and generalized EM algorithms

- Implementation of the EM algorithm

- Example. Normal distribution with unknown mean and variance and partially conjugate prior distribution

- Extensions of the EM algorithm

- Supplemented EM and ECM algorithms

- Parameter-expanded EM (PX-EM)

- 13.5 Conditional and marginal posterior approximations

- Approximating the conditional posterior density, p(γ|ϕ, y)

- Approximating the marginal posterior density, p(ϕ|y), using an analytic approximation to p(γ|ϕ, y)

- 13.6 Example: hierarchical normal model (continued)

- Crude initial parameter estimates

- Conditional maximization to find the joint mode of p(θ, μ, log σ, log τ|y)

- Factoring into conditional and marginal posterior densities

- Finding the marginal posterior mode of p(μ, log σ, log τ|y) using EM

- Constructing an approximation to the joint posterior distribution

- Comparison to other computations

- 13.7 Variational inference

- Minimization of Kullback-Leibler divergence

- The class of approximate distributions

- The variational Bayes algorithm

- Example. Educational testing experiments

- Proof that each step of variational Bayes decreases the Kullback-Leibler divergence

- Model checking

- Variational Bayes followed by importance sampling or particle filtering

- EM as a special case of variational Bayes

- More general forms of variational Bayes

- 13.8 Expectation propagation

- Expectation propagation for logistic regression

- Extensions of expectation propagation

- 13.9 Other approximations

- Integrated nested Laplace approximation (INLA)

- Central composite design integration (CCD)

- Approximate Bayesian computation (ABC)

- 13.10 Unknown normalizing factors

- Posterior computations involving an unknown normalizing factor

- Bridge and path sampling

- 13.11 Bibliographic note

- 13.12 Exercises

- Part IV Regression Models

- Chapter 14 Introduction to regression models

- 14.1 Conditional modeling

- Notation

- Formal Bayesian justification of conditional modeling

- 14.2 Bayesian analysis of classical regression

- Notation and basic model

- The standard noninformative prior distribution

- The posterior distribution

- Sampling from the posterior distribution

- The posterior predictive distribution for new data

- Model checking and robustness

- 14.3 Regression for causal inference: incumbency and voting

- Units of analysis, outcome, and treatment variables

- Setting up control variables so that data collection is approximately ignorable

- Implicit ignorability assumption

- Transformations

- Posterior inference

- Model checking and sensitivity analysis

- 14.4 Goals of regression analysis

- Predicting y from x for new observations

- Causal inference

- Do not control for post-treatment variables when estimating the causal effect.

- 14.5 Assembling the matrix of explanatory variables

- Identifiability and collinearity

- Nonlinear relations

- Indicator variables

- Categorical and continuous variables

- Interactions

- Controlling for irrelevant variables

- Selecting the explanatory variables

- 14.6 Regularization and dimension reduction

- Lasso

- 14.7 Unequal variances and correlations

- Modeling unequal variances and correlated errors

- Bayesian regression with a known covariance matrix

- Bayesian regression with unknown covariance matrix

- Variance matrix known up to a scalar factor

- Weighted linear regression

- Parametric models for unequal variances

- Estimating several unknown variance parameters

- General models for unequal variances

- 14.8 Including numerical prior information

- Coding prior information on a regression parameter as an extra ‘data point’

- Interpreting prior information on several coefficients as several additional ‘data points’

- Prior information about variance parameters

- Prior information in the form of inequality constraints on parameters

- 14.9 Bibliographic note

- 14.10 Exercises

- Chapter 15 Hierarchical linear models

- 15.1 Regression coefficients exchangeable in batches

- Simple varying-coefficients model

- Intraclass correlation

- Mixed-effects model

- Several sets of varying coefficients

- Exchangeability

- 15.2 Example: forecasting U.S. presidential elections

- Unit of analysis and outcome variable

- Preliminary graphical analysis

- Fitting a preliminary, nonhierarchical, regression model

- Checking the preliminary regression model

- Extending to a varying-coefficients model

- Forecasting

- Posterior inference

- Reasons for using a hierarchical model

- 15.3 Interpreting a normal prior distribution as extra data

- Interpretation as a single linear regression

- More than one way to set up a model

- 15.4 Varying intercepts and slopes

- Inverse-Wishart model

- Scaled inverse-Wishart model

- Predicting business school grades for different groups of students

- 15.5 Computation: batching and transformation

- Gibbs sampler, one batch at a time

- All-at-once Gibbs sampler

- Parameter expansion

- Transformations for HMC

- 15.6 Analysis of variance and the batching of coefficients

- Notation and model

- Computation

- Finite-population and superpopulation standard deviations

- 15.7 Hierarchical models for batches of variance components

- Superpopulation and finite-population standard deviations

- 15.8 Bibliographic note

- 15.9 Exercises

- Chapter 16 Generalized linear models

- 16.1 Standard generalized linear model likelihoods

- Continuous data

- Poisson

- Binomial

- Overdispersed models

- 16.2 Working with generalized linear models

- Canonical link functions

- Offsets

- Interpreting the model parameters

- Understanding discrete-data models in terms of latent continuous data

- Bayesian nonhierarchical and hierarchical generalized linear models

- Noninformative prior distributions on β

- Conjugate prior distributions

- Nonconjugate prior distributions

- Hierarchical models

- Normal approximation to the likelihood

- Approximate normal posterior distribution

- More advanced computational methods

- 16.3 Weakly informative priors for logistic regression

- The problem of separation

- Computation with a specified normal prior distribution

- Approximate EM algorithm with a t prior distribution

- Default prior distribution for logistic regression coefficients

- Other models

- Bioassay example

- Weakly informative default prior compared to actual prior information

- 16.4 Overdispersed Poisson regression for police stops

- Aggregate data

- Regression analysis to control for precincts

- 16.5 State-level opinions from national polls

- 16.6 Models for multivariate and multinomial responses

- Multivariate outcomes

- Extension of the logistic link

- Special methods for ordered categories

- Using the Poisson model for multinomial responses

- 16.7 Loglinear models for multivariate discrete data

- The Poisson or multinomial likelihood

- Setting up the matrix of explanatory variables

- Prior distributions

- Computation

- 16.8 Bibliographic note

- 16.9 Exercises

- Chapter 17 Models for robust inference

- 17.1 Aspects of robustness

- Robustness of inferences to outliers

- Sensitivity analysis

- 17.2 Overdispersed versions of standard models

- The t distribution in place of the normal

- Negative binomial alternative to Poisson

- Beta-binomial alternative to binomial

- The t distribution alternative to logistic and probit regression

- Why ever use a nonrobust model?

- 17.3 Posterior inference and computation

- Notation for robust model as expansion of a simpler model

- Gibbs sampling using the mixture formulation

- Sampling from the posterior predictive distribution for new data

- Computing the marginal posterior distribution of the hyperparameters by importance weighting

- Approximating the robust posterior distributions by importance resampling

- 17.4 Robust inference for the eight schools

- Robust inference based on a t4 population distribution

- Sensitivity analysis based on tν distributions with varying values of ν

- Treating ν as an unknown parameter

- Discussion

- 17.5 Robust regression using t-distributed errors

- Iterative weighted linear regression and the EM algorithm

- Gibbs sampler and Metropolis algorithm

- 17.6 Bibliographic note

- 17.7 Exercises

- Chapter 18 Models for missing data

- 18.1 Notation

- 18.2 Multiple imputation

- Computation using EM and data augmentation

- Inference with multiple imputations

- 18.3 Missing data in the multivariate normal and t models

- Finding posterior modes using EM

- Drawing samples from the posterior distribution of the model parameters

- Extending the normal model using the t distribution

- Nonignorable models

- 18.4 Example: multiple imputation for a series of polls

- Background

- Multivariate missing-data framework

- A hierarchical model for multiple surveys

- Use of the continuous model for discrete responses

- Computation

- Accounting for survey design and weights

- Results

- 18.5 Missing values with counted data

- 18.6 Example: an opinion poll in Slovenia

- Crude estimates

- The likelihood and prior distribution

- The model for the ‘missing data’

- Using the EM algorithm to find the posterior mode of θ

- Using SEM to estimate the posterior variance matrix and obtain a normal approximation

- Multiple imputation using data augmentation

- Posterior inference for the estimand of interest

- 18.7 Bibliographic note

- 18.8 Exercises

- Part V Nonlinear and Nonparametric Models

- Chapter 19 Parametric nonlinear models

- 19.1 Example: serial dilution assay

- Laboratory data

- The model

- Inference

- Comparison to existing estimates

- 19.2 Example: population toxicokinetics

- Background

- Toxicokinetic model

- Difficulties in estimation and the role of prior information

- Measurement model

- Population model for parameters

- Prior information

- Joint posterior distribution for the hierarchical model

- Computation

- Inference for quantities of interest

- Evaluating the fit of the model

- Use of a complex model with an informative prior distribution

- 19.3 Bibliographic note

- 19.4 Exercises

- Chapter 20 Basis function models

- 20.1 Splines and weighted sums of basis functions

- 20.2 Basis selection and shrinkage of coefficients

- Shrinkage priors

- 20.3 Non-normal models and regression surfaces

- Other error distributions

- Multivariate regression surfaces

- 20.4 Bibliographic note

- 20.5 Exercises

- Chapter 21 Gaussian process models

- 21.1 Gaussian process regression

- Covariance functions

- Inference

- Covariance function approximations

- Marginal likelihood and posterior

- 21.2 Example: birthdays and birthdates

- Decomposing the time series as a sum of Gaussian processes

- An improved model

- 21.3 Latent Gaussian process models

- 21.4 Functional data analysis

- 21.5 Density estimation and regression

- Density estimation

- Density regression

- Latent-variable regression

- 21.6 Bibliographic note

- 21.7 Exercises

- Chapter 22 Finite mixture models

- 22.1 Setting up and interpreting mixture models

- Finite mixtures

- Continuous mixtures

- Identifiability of the mixture likelihood

- Prior distribution

- Ensuring a proper posterior distribution

- Number of mixture components

- More general formulation

- Mixtures as true models or approximating distributions

- Basics of computation for mixture models

- Crude estimates

- Posterior modes and marginal approximations using EM and variational Bayes

- Posterior simulation using the Gibbs sampler

- Posterior inference

- 22.2 Example: reaction times and schizophrenia

- Initial statistical model

- Crude estimate of the parameters

- Finding the modes of the posterior distribution using ECM

- Normal and t approximations at the major mode

- Simulation using the Gibbs sampler

- Possible difficulties at a degenerate point

- Inference from the iterative simulations

- Posterior predictive distributions

- Checking the model

- Expanding the model

- Checking the new model

- 22.3 Label switching and posterior computation

- 22.4 Unspecified number of mixture components

- 22.5 Mixture models for classification and regression

- Classification

- Regression

- 22.6 Bibliographic note

- 22.7 Exercises

- Chapter 23 Dirichlet process models

- 23.1 Bayesian histograms

- 23.2 Dirichlet process prior distributions

- Definition and basic properties

- Stick-breaking construction

- 23.3 Dirichlet process mixtures

- Specification and Polya urns

- Blocked Gibbs sampler

- Hyperprior distribution

- 23.4 Beyond density estimation

- Nonparametric residual distributions

- Nonparametric models for parameters that vary by group

- Functional data analysis

- 23.5 Hierarchical dependence

- Dependent Dirichlet processes

- Hierarchical Dirichlet processes

- Nested Dirichlet processes

- Convex mixtures

- 23.6 Density regression

- Dependent stick-breaking processes

- 23.7 Bibliographic note

- 23.8 Exercises

- Appendixes

- Appendix A Standard probability distributions

- A.1 Continuous distributions

- Uniform

- Univariate normal

- Lognormal

- Multivariate normal

- Gamma

- Inverse-gamma

- Chi-square

- Inverse chi-square

- Exponential

- Weibull

- Wishart

- Inverse-Wishart

- LKJ correlation

- t

- Beta

- Dirichlet

- Constrained distributions

- A.2 Discrete distributions

- Poisson

- Binomial

- Multinomial

- Negative binomial

- Beta-binomial

- A.3 Bibliographic note

- Appendix B Outline of proofs of limit theorems

- Mathematical framework

- Convergence of the posterior distribution for a discrete parameter space

- Convergence of the posterior distribution for a continuous parameter space

- Convergence of the posterior distribution to normality

- Multivariate form

- B.1 Bibliographic note

- Appendix C Computation in R and Stan

- C.1 Getting started with R and Stan

- C.2 Fitting a hierarchical model in Stan

- Stan program

- R script for data input, starting values, and running Stan

- Accessing the posterior simulations in R

- Posterior predictive simulations and graphs in R

- Alternative prior distributions

- Using the t model

- C.3 Direct simulation, Gibbs, and Metropolis in R

- Marginal and conditional simulation for the normal model

- Gibbs sampler for the normal model

- Gibbs sampling for the t model with fixed degrees of freedom

- Gibbs-Metropolis sampling for the t model with unknown degrees of freedom

- Parameter expansion for the t model

- C.4 Programming Hamiltonian Monte Carlo in R

- C.5 Further comments on computation

- C.6 Bibliographic note

- References

- Author Index

- Subject Index
